About Me

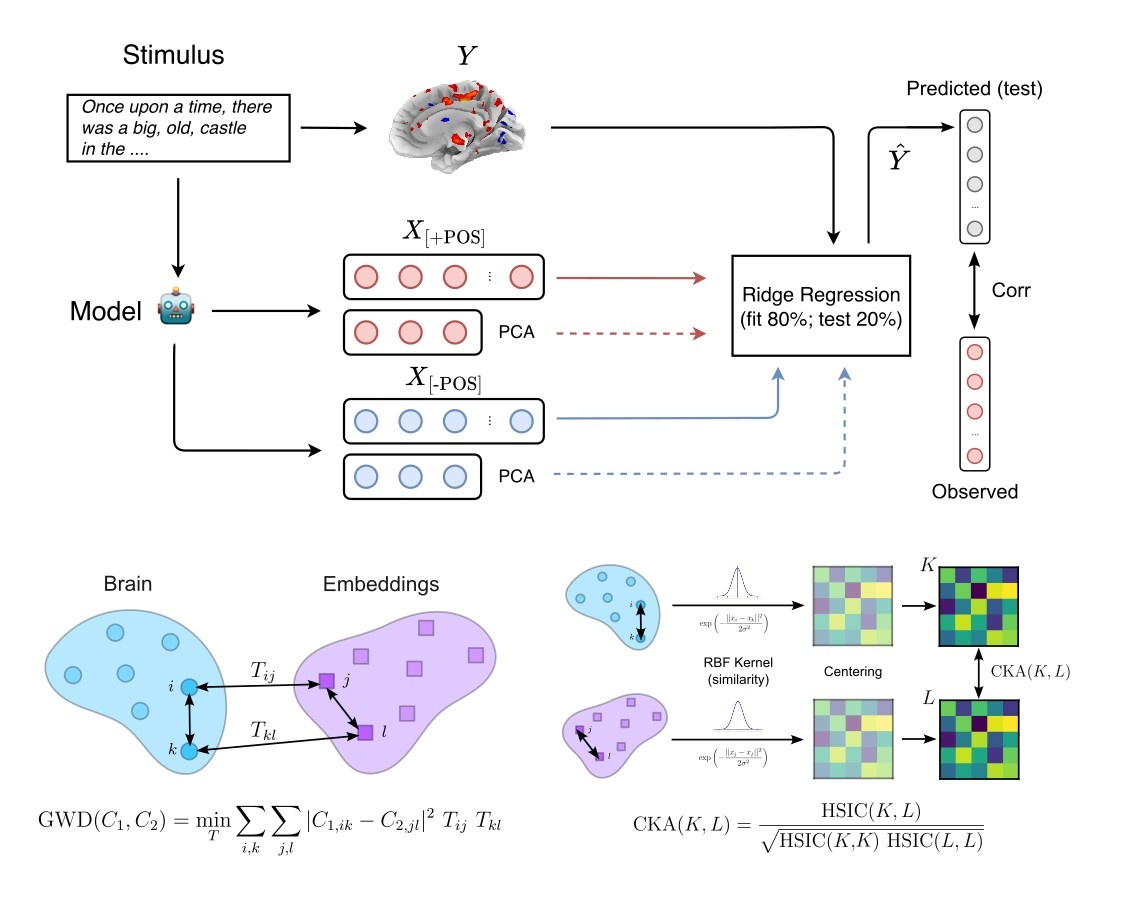

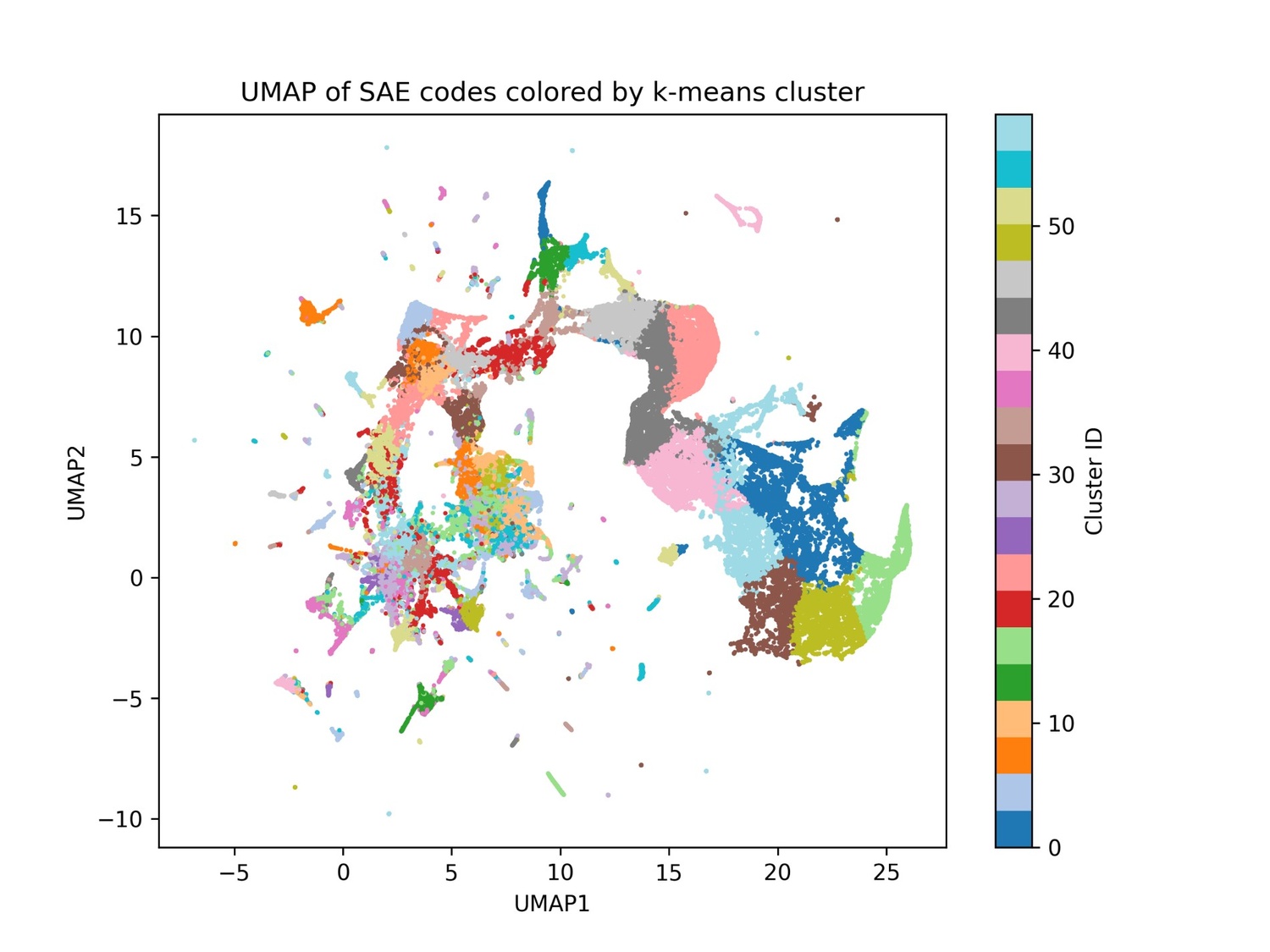

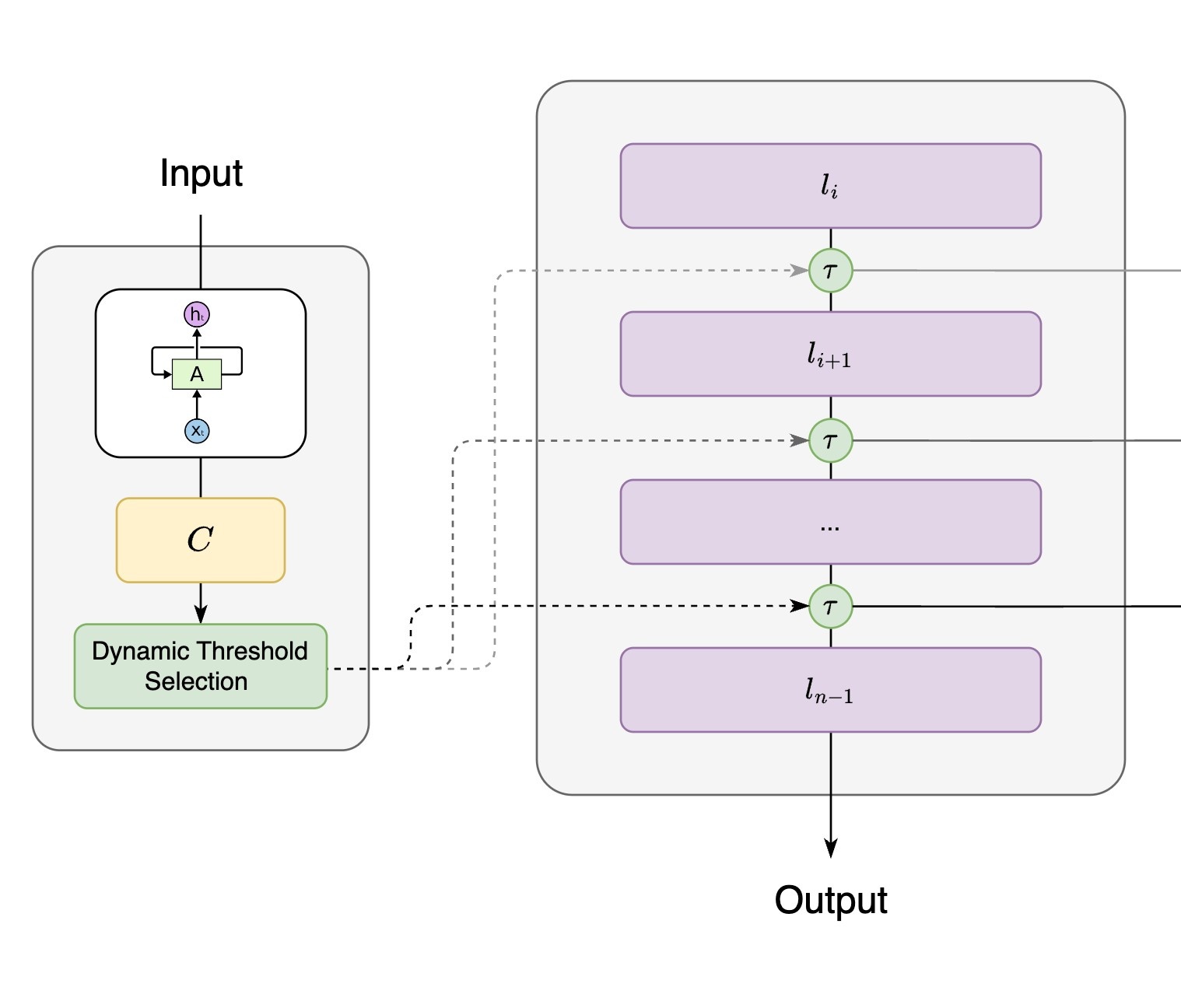

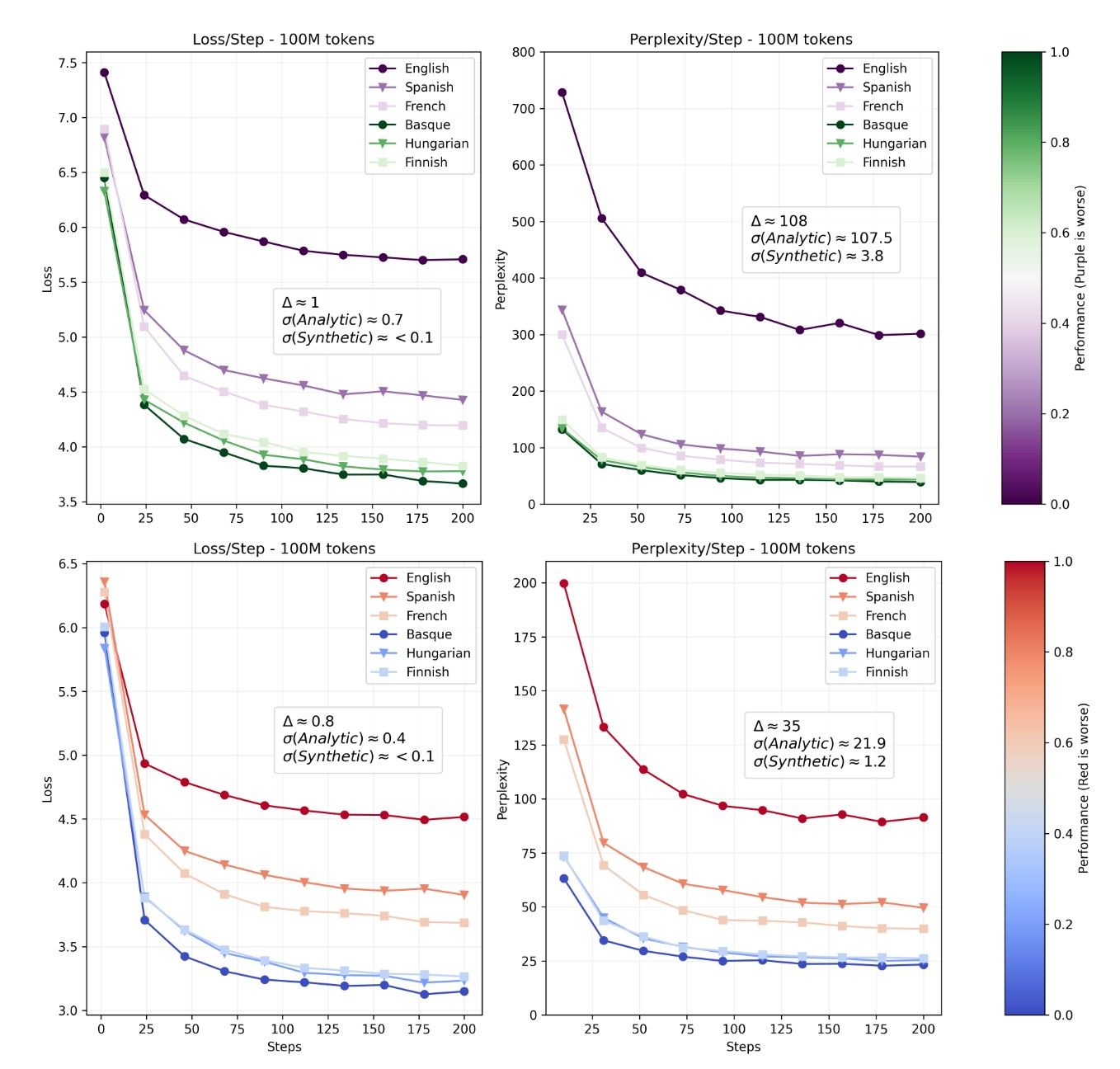

I am a PhD student in Linguistics at UC Berkeley, working at the intersection of computational linguistics, deep learning, and cognitive science. My work examines how humans process language and how insights from human language processing can inform the design and evaluation of computational language models. I am particularly interested in using deep learning to study the structure of linguistic representations, both in machines and in the human brain, and in exploring whether modern language models can be used to test hypotheses about human language and cognition.